Enhancing Inclusivity for Visually Impaired Individuals in Video Games: A Study on Sound Design

Research dissertation for the Master's degree in Music Applied to Visual Arts, publicly defended in 2023

Federica Alfano

6/22/2024

Contents

Introduction

I - Audio games

1.1 Audio Games as accessible solutions

1.2 Modern « game audio »: 3D and binaural sound

1.3 « A Blind Legend » and auditory guidance

II - Creating an accessible audiovisual work

2.1 Audio description in the media

2.2 Spatial orientation through sound

2.3 The utopia of a standardized sound language

III - Towards a paradigm of inclusivity in the world of video games

3.1 Echolocation and the challenges of audio space

3.2 « The Last of Us II » and the accessibility revolution

3.3 The transition from accessibility to inclusivity

Conclusion

References

Appendices

Introduction

For years, discussions on accessibility in video games have involved not only gamers, but also studios and research institutions.

Nevertheless, the term « accessibility » remains ambiguous and subject to various interpretations.

For example, some people use the word « accessibility » to describe how difficult a game is, while others use it to refer to how playable a game is for someone with a vision problem, hearing loss or motor impairment.

This dissertation aims to delve into the accessibility discourse of video games specifically for individuals with visual impairments, excluding other physical challenges.

This focus was chosen for two main reasons. First, addressing accessibility universally is complex, as each disability presents unique challenges; concentrating on visual impairments allows for a more focused and coherent discussion. Second, the aim of this analysis is to see to what extent audio design could make video games more accessible to visually impaired people, since, by the nature of their condition, they are the players who stand to benefit most from careful sound work.

A study carried out by Microsoft researchers with a group of visually impaired people found that several respondents wished they could play the video games available on the market, which are primarily designed for sighted players, so as to feel included in conversations.

I was also able to observe this problem myself when I spoke to six people with varying degrees of blindness, several of whom said that they felt excluded from their environment because most of their friends played games such as « Fortnite » or « League of Legends » which, unfortunately, are still unplayable and inaccessible for them.

This exclusion is a consequence of the primacy of visual language in video games: the vast majority of them communicate information to players mostly through graphical elements and very little through auditory feedback. Video games demand such a high level of reactivity and speed of interaction that they use several communication channels (visual, auditory or tactile) to enhance interaction and immersion. However, the visual channel remains the preferred one, because most people rely mainly on sight to interact with the world around them.

As a result, the visiocentric nature of video games not only hinders accessibility for blind individuals but also widens the gap between those who benefit from video games and those unable to engage with them.

Paradoxically, a gaming experience is supposed to bring people together and create communities, but here it only accentuates the ableism of people without vision problems.

Research indicates that visually impaired individuals possess heightened auditory abilities and can effectively navigate spatial environments through audio cues. Moreover, in recent years, technological advances in audio spatialisation have shown that incorporating sound design early in video game development not only enhances immersion but also makes it a fundamental aspect of gameplay. Nevertheless, many video game studios tend to leave audio integration until late in the production process, which leaves little time for refinement.

How can video game sound design enhance accessibility for the visually impaired and promote their inclusivity in the gaming community?

First, we will explore the types of games that are fully accessible to visually impaired people, namely audio games.

We will then look at how studios currently apply accessibility fixes in the final phase of production, and the extent to which this can affect a game's difficulty and artistic direction.

Subsequently, we will look at examples of experiments and solutions that offer a new perspective on the accessibility issue, so that it is no longer seen as a final improvement, but as a fundamental design stage that could increase the inclusion of the visually impaired community.

To make this analysis easier to follow, it should be specified that terms such as partially sighted, blind or visually impaired will be used in this study to refer to any person with a more or less severe degree of blindness.

According to the WHO, vision impairment is classified into five categories:

Moderate visual impairment – presenting visual acuity worse than 6/18 and better than 6/60

Severe visual impairment – presenting visual acuity worse than 6/60 and better than 3/60

Moderate blindness – presenting visual acuity worse than 3/60 and better than 1/60

Severe blindness – presenting visual acuity worse than 1/60 with light perception

Total blindness – irreversible blindness with no light perception
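The five categories above can be read as a simple lookup on a Snellen fraction. The sketch below is only an illustration and not a clinical tool. The function name is hypothetical. Treating each lower bound as inclusive is an assumption made here, whereas the list uses the strict "better than". Because the function compares ratios, it accepts both metric (6/60) and imperial (20/200) notation.

```python
from fractions import Fraction

# Lower acuity bound of each category, checked from best to worst vision.
# Bounds are treated as inclusive (an assumption; the list above says "better than").
CATEGORY_BOUNDS = [
    (Fraction(6, 60), "Moderate visual impairment"),
    (Fraction(3, 60), "Severe visual impairment"),
    (Fraction(1, 60), "Moderate blindness"),
]


def classify_acuity(numerator: int, denominator: int, light_perception: bool = True) -> str:
    """Return the category for a presenting visual acuity such as 6/36 or 20/200."""
    if not light_perception:
        return "Total blindness"
    acuity = Fraction(numerator, denominator)
    if acuity >= Fraction(6, 18):
        return "Outside these five categories"
    for lower_bound, name in CATEGORY_BOUNDS:
        if acuity >= lower_bound:
            return name
    # Worse than 1/60, but light is still perceived.
    return "Severe blindness"


print(classify_acuity(6, 36))
print(classify_acuity(20, 200))  # same ratio as 6/60
print(classify_acuity(1, 120))
```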

I - Audio games

1.1 Audio Games as accessible solutions

An audio game is a game built on a sound interface, so that the user can play it without any graphical interface.

To enable users to engage without relying on a graphical interface, audio games use various forms of sound feedback. These include earcons, which are brief sounds conveying information or events; auditory icons, which represent real-life sounds such as nature or car noises; and spoken messages delivered by synthetic or pre-recorded voices.

The first audio game, « Simon », was created in 1978 by Ralph Baer and Howard Morrison, based on the game « Touch Me » (released by Atari in 1974).

In this mnemonic game, players interacted with four colored buttons, each emitting a distinct sound. The objective was to replicate a sequence of sounds by pressing the corresponding buttons. While not initially designed for the visually impaired, « Simon » is now recognized as the pioneering accessible audio game for the blind community in video game history.
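The mechanic described above is simple enough to sketch in a few lines. The sketch also makes clear why Simon can be played by ear: every button is paired with its own tone, so the whole sequence can be followed without looking. This is a hypothetical reconstruction of the rules, not the original toy's electronics, and the tone frequencies are placeholders.

```python
import random

# Placeholder tones (Hz): one distinct pitch per button.
TONES_HZ = {"green": 392.0, "red": 330.0, "yellow": 262.0, "blue": 196.0}


def extend_sequence(sequence: list[str], rng: random.Random) -> list[str]:
    """Add one random button; the game then replays the whole sequence as tones."""
    return sequence + [rng.choice(list(TONES_HZ))]


def check_answer(sequence: list[str], answer: list[str]) -> bool:
    """True while every button pressed so far matches the sequence."""
    return len(answer) <= len(sequence) and answer == sequence[: len(answer)]


rng = random.Random(1978)
sequence: list[str] = []
for _ in range(4):
    sequence = extend_sequence(sequence, rng)
print([TONES_HZ[button] for button in sequence])  # what the player hears
```

A round is won when `check_answer` is still true and the answer has the same length as the sequence. The game then extends the sequence by one button.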

From the 1980s onward, the most developed audio games were « text-to-speech » (TTS) games, in which a synthetic voice reads the text to the player, who interacts by typing text or selecting pre-written answers.

Such games were very common in the past thanks to their technical simplicity. It was only with advances in technology, better game engines and, in particular, the release of graphically advanced video games such as Final Fantasy that visuals took precedence over sound in development budgets and schedules. Text-based games nonetheless remain highly adaptable products, for several reasons.

Firstly, creating a game solely reliant on text demands advanced writing and game design skills, yet it is relatively straightforward to develop from both a graphical and technical perspective. Additionally, the widespread availability of voice synthesizer technology, some of which is free, caters to visually impaired individuals who are accustomed to using such tools daily.

In a recent interview with six visually impaired individuals, it became evident that while they appreciate text games and their narratives, they sometimes find these experiences less engaging than mainstream video games. Although text games are inherently accessible, their repetitive mechanics often lead players to abandon the adventure prematurely.

Furthermore, they observed that video games, especially those on mobile devices made accessible through voice synthesis, frequently lack articulated storytelling or narration.

Audio games thus emerge as a straightforward, low-cost way of developing a product that is accessible to visually impaired individuals.

However, today's studios are experimenting more and more with new sound technologies and are trying to bring a new type of experience to all types of player.

Some video games, such as the « Mortal Kombat » series, have added sound feedback to each action, offering a new, more accessible way of playing.

« Street Fighter 2 » (1991) likewise uses a distinct sound effect for each attack, specific to each character. These sounds were produced with such meticulous attention to detail that the game is considered accessible to the visually impaired, despite its highly visual nature and the reactivity it demands.

Regrettably, the games mentioned above are still exceptions in the video game market and do not reflect the current state of video game accessibility.

They do, however, show that careful work on sound is a sine qua non for making a game accessible, which explains why audio games are still considered to be the easiest and most effective solution for creating an experience for visually impaired people.

In fact, a new type of audio game based on spatialization and binaural sound (see section 1.2) is gaining ground on the market and is proving to be a very interesting and popular option for the visually impaired community. A good example of this type of game is « A Blind Legend », which we will look at in more detail in section 1.3.

1.2 Modern « game audio »: 3D and binaural sound

Current game engines enable the creation of realistic 3D environments and the implementation of spatial sounds, enhancing atmospheres and sound sources.

Each game features one or more « audio listeners » replicating the player's auditory perspective during gameplay.

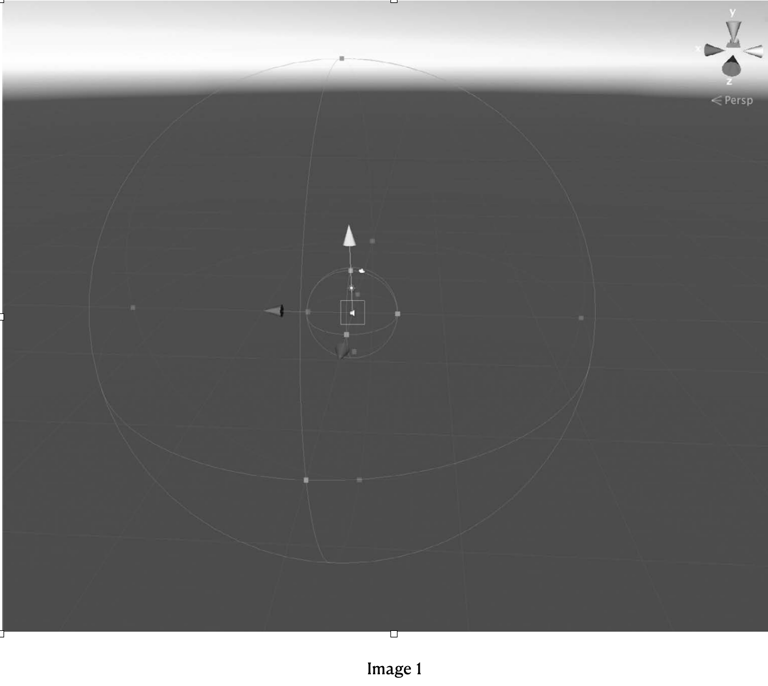

Sounds are rendered in relation to the player's point of view, allowing for auditory perception of distances using tools like attenuation, often represented by a sphere (see image 1).
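The attenuation sphere can be sketched as a gain that depends only on the distance between the audio listener and the source. The version below assumes a simple linear roll-off between an inner radius (full volume) and an outer radius (silence). The parameter names and default values are hypothetical, and real engines also offer logarithmic or custom curves.

```python
import math


def attenuation_gain(distance: float, min_distance: float = 1.0, max_distance: float = 20.0) -> float:
    """Linear roll-off between an inner sphere (gain 1) and an outer sphere (gain 0)."""
    if max_distance <= min_distance:
        raise ValueError("max_distance must be greater than min_distance")
    if distance <= min_distance:
        return 1.0  # inside the inner sphere: full volume
    if distance >= max_distance:
        return 0.0  # outside the outer sphere: silent
    return 1.0 - (distance - min_distance) / (max_distance - min_distance)


listener = (0.0, 0.0, 0.0)
source = (3.0, 4.0, 0.0)
distance = math.dist(listener, source)  # Euclidean distance in the 3D scene
print(distance, attenuation_gain(distance))
```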

By way of comparison, when we hear a sound in real life, we first try to detect where it comes from and how far away it is from us.

To do so, our brain unconsciously processes four parameters: the intensity of the sound (Loudness); the proportion between the direct sound and its reflections (Dry to reflected sound ratio); the absorption of high frequencies by the air (Timbre); and the perceived size of the sound (Width), which, for example, seems larger as we approach the sound source and smaller as we move away from it.
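Two of these four cues lend themselves to a quick calculation. The sketch rests on two textbook assumptions: the direct sound comes from a point source in free field, and the room's reverberant field is diffuse and of roughly constant level. Under these assumptions, the direct sound loses about 6 dB per doubling of distance while the reflected sound does not, so the dry-to-reflected ratio falls as the source moves away. The critical distance (where direct and reflected levels are equal) is a hypothetical value here. Timbre and width are not modelled.

```python
import math


def direct_level_db(distance_m: float, reference_m: float = 1.0) -> float:
    """Level of the direct sound relative to its level at reference_m (inverse-distance law)."""
    return 20 * math.log10(reference_m / distance_m)


def dry_to_reflected_db(distance_m: float, critical_distance_m: float = 2.0) -> float:
    """Dry-to-reflected ratio, assuming the reflected level is constant and equals
    the direct level at the critical distance."""
    return direct_level_db(distance_m, reference_m=critical_distance_m)


for d in (1.0, 2.0, 4.0, 8.0):
    print(f"{d:>4} m  direct {direct_level_db(d):6.1f} dB  dry/reflected {dry_to_reflected_db(d):6.1f} dB")
```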

Yet however precisely game engines spatialize sound, game audio is typically delivered through either headphones or speakers (mono, stereo, 5.1, 7.1, etc.).

Faithfully reproducing a full 360° sound space therefore depends on each player's sound equipment, which poses a significant technical challenge.

Stereo speakers, for instance, which are among the most common setups used by gamers, cannot recreate a precise sound space, because they only provide a horizontal representation of sound over a rather limited arc (180°).
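The limitation of two-channel playback can be seen in a standard constant-power pan law. In the sketch below, only the left-right component of the source direction survives. This is a generic technique, not any particular engine's panner. A source 30° to the front-right and one 150° behind-right get the same gains, and elevation cannot be expressed at all.

```python
import math


def stereo_gains(azimuth_deg: float) -> tuple[float, float]:
    """Constant-power pan: (left, right) gains with left**2 + right**2 == 1.

    azimuth_deg: 0 = straight ahead, 90 = right, -90 = left, 180 = behind.
    Only sin(azimuth), the left-right component, is kept, so front and back
    positions collapse onto the same pan position.
    """
    pan = math.sin(math.radians(azimuth_deg))  # -1 (left) .. +1 (right)
    angle = (pan + 1.0) * math.pi / 4           # 0 .. pi/2
    return math.cos(angle), math.sin(angle)


front_right = stereo_gains(30)
behind_right = stereo_gains(150)
print(front_right, behind_right)  # practically identical gains
```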

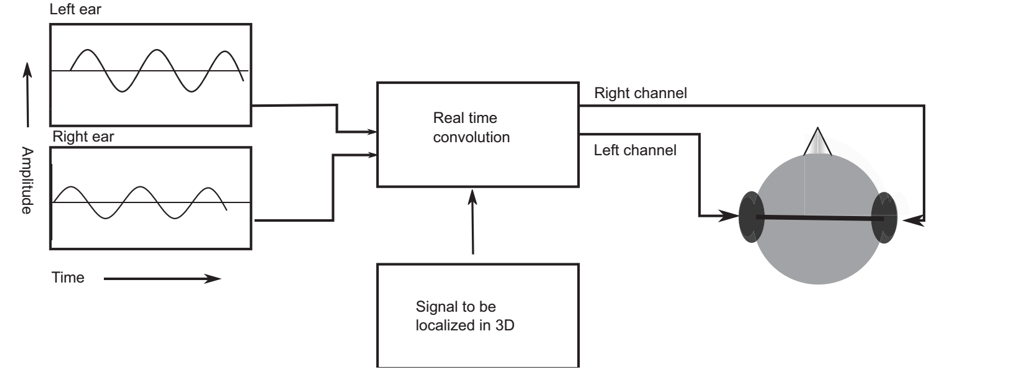

Currently, the most common way of reproducing 3D audio in a game engine is binaural sound rendered through headphones.

Unlike speakers that emit sound into a room with reverberations and physical sound sources, headphones rest directly on the ears, causing sound waves to be perceived differently (see image 2).

Consequently, when listening through headphones, the perception of a tangible sound source diminishes, replaced by the sensation that the sound originates within the listener's head.

Binaural sound is therefore an auditory illusion: using virtual spatial coordinates, it makes sounds seem to come from positions in a space that is, in this case, a representation constructed by our own mind.
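Binaural rendering relies partly on the interaural time difference (ITD): a sound coming from the right reaches the right ear slightly before the left one. A common textbook approximation is Woodworth's spherical-head formula, ITD = (r / c)(θ + sin θ). The sketch below applies it with an assumed average head radius and uses the result as a crude delay between the two headphone channels. Real binaural renderers use measured head-related transfer functions (HRTFs), which also capture level and spectral differences, so this only illustrates the principle.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C


def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a distant source.

    azimuth_deg: 0 = straight ahead, positive = right; the formula is meant
    for -90..90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


def itd_only_binaural(mono: list[float], azimuth_deg: float, sample_rate: int = 48_000):
    """Return (left, right): the ear farther from the source is delayed by the ITD."""
    delay = round(abs(woodworth_itd(azimuth_deg)) * sample_rate)
    early = list(mono) + [0.0] * delay
    late = [0.0] * delay + list(mono)
    # Source on the right (positive azimuth): the left ear hears it later.
    return (late, early) if azimuth_deg >= 0 else (early, late)


print(f"{woodworth_itd(90) * 1e6:.0f} microseconds")  # maximum ITD, source fully to the right
left, right = itd_only_binaural([1.0], azimuth_deg=90)
```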

1.3 « A Blind Legend » and auditory guidance

« A Blind Legend » is a game released in 2015, developed by Dowino, a company that is now considered a benchmark in video game accessibility.

This audio game for mobile and PC was created to be playable by both blind and sighted individuals.

It is an adventure game featuring a blind protagonist guided by his daughter. Utilizing binaural sound, players can perceive the direction of the girl's voice and navigate the character accordingly.

The same goes for combat, where sounds can be used to guess an enemy's location and when they are going to strike (once the game has taught the player how the attack rhythm works), as well as whether you are receiving or inflicting damage.

In my interview with Jérôme Cattenot, Production Director at Dowino, we discussed several aspects of the game that warrant closer analysis.

It is important to note that the game is set in the Middle Ages, which required the developers to research sounds that could recreate a credible and relevant auditory atmosphere of that era. With no visuals to rely on, the primary challenge was therefore to craft an immersive setting and narrative through audio alone.

Moreover, every video game stems from a desire to deliver a captivating yet user-friendly experience. Dowino's producers therefore aimed to refine the gestures and interactions essential to an enjoyable experience, on PC as on mobile, while keeping the game accessible to a broad audience.

Lastly, « A Blind Legend » relies on voice guidance: the directions given by the girl's voice are our only means of guiding our avatar and advancing in the game.
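For the girl's voice to work as a guide, the game must know at every moment where the voice is relative to the direction the hero is facing. It then passes that angle to the binaural renderer. Dowino's actual Unity implementation is not documented here, so the following is a hypothetical sketch on a 2D top-down map. It assumes a heading of 0° points along +y and that angles grow clockwise.

```python
import math


def relative_azimuth(player_xy, heading_deg, source_xy):
    """Direction of a sound source relative to the player's facing, in degrees.

    Result in [-180, 180): 0 = straight ahead, positive = to the right,
    negative = to the left, -180 = directly behind.
    """
    dx = source_xy[0] - player_xy[0]
    dy = source_xy[1] - player_xy[1]
    bearing = math.degrees(math.atan2(dx, dy))  # clockwise from +y
    return (bearing - heading_deg + 180.0) % 360.0 - 180.0


# The guide calls from the east while the player faces north: turn right.
print(relative_azimuth((0, 0), 0, (10, 0)))
# After turning to face east, the voice is straight ahead.
print(relative_azimuth((0, 0), 90, (10, 0)))
```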

The guiding voice is a human one, performed by an actor. Although a human voice adds value to the quality of the game, it also means extra recording costs, especially if the game is to be translated into several languages.

This budgetary problem was raised in particular by smaller studios, which often lack the funds to address certain issues in their productions, especially when it comes to accessibility.

For this reason, many games rely on the screen readers already built into mobile phones (VoiceOver on Apple devices, TalkBack on Android), which use text-to-speech. Developers can also integrate spoken feedback or customized audio description to make the game more accessible, but such voices would, in most cases, be synthetic.

While discussing « A Blind Legend » with a group of visually impaired people, I observed that they appreciated the human touch of being guided by a non-synthetic voice, because the experience resembles what they already know in real life when walking with sighted people.

In fact, even though synthetic voices are part of their daily lives, this does not mean that they genuinely like them: their presence is more a matter of acceptance, or even resignation, on their part. Ultimately, voice navigation makes the experience more pleasant for them. However, one of the people interviewed said that where a human voice was not possible, they would prefer the synthesized voice to be replaced by a more thorough and advanced sound design, mainly through the use of ad hoc sound effects (SFX).

Jérôme Cattenot also stressed during the interview that although « A Blind Legend » is based solely on sound, it was developed with the Unity game engine. Like its competitors, Unity requires levels to be designed as a three-dimensional map.

This means that the game design was based on a conception of space typical of a sighted person. We will therefore analyse this issue in parts II and III, looking in particular at the empathy that game designers need to show in order to design an accessible game.

II - Creating an accessible audiovisual work

2.1 Audio description in the media

« Audio description (AD) is an accommodation used by people who are visually impaired or blind. It is a spoken description of the visual elements of an accompanying work, providing an accessible alternative to obtaining information that sighted individuals at the same time and place may obtain visually. Therefore, it is temporal as well as descriptive ».

Audio description can be found in a number of media, including film, television, theatre, video games and others.

It should be noted, however, that among these art forms, theatre presents a unique challenge. Other media (films or video games) have a predetermined timeline with fixed action triggers, whereas a play is live and can leave room for improvisation. Audio descriptions therefore cannot be pre-recorded, since they would risk falling out of sync with what is happening on stage.

For this reason, theatrical performances require an « audio-describer » to be present on site to describe what is happening on stage.

However, there are a number of important issues that the audio-describer must take into account in their work: firstly, after consulting with the director, they must choose which elements to transcribe as a priority to enhance the audience's understanding of the stage events.

Secondly, the audio description should not disrupt the comprehension of dialogues or monologues; thirdly, the language used for audio description should match the artistic style of the piece and, where relevant, its audience (e.g., if the audio description targets children).

It has been noted that incorporating a human voice enhances the experience for visually impaired individuals. The live performance industry has embraced this concept by striving to offer tailored and immersive accessibility tools for blind audiences, including live translation services. Conversely, in film, television, and video games, audio descriptions are often generated using synthetic voices.

Although this solution allows considerable savings in both budget and production time (preparing an audio description script alone takes substantial time), it also creates an inevitable gap between the work itself and this accessibility tool. The text recited by the computer-generated voice is fairly monotonous and spoken at high speed so as not to interfere with the understanding of the dialogue. This creates a dissonance between the audio description and the artistic and human framework of dialogue between real actors.

To bridge this gap, some major video game developers are opting to use real actors for audio descriptions, ensuring linguistic and tonal consistency with the depicted scenes. A notable example is Ubisoft's « Assassin’s Creed Valhalla » trailer, in which the voiceover blends seamlessly with the Viking-themed setting.

Audio description is currently the most effective method for conveying visual information to individuals with visual impairments. However, in contexts such as cinema, television, and video games, it functions as an additional layer that, while undoubtedly useful, may not always fully align with the content being described.

2.2 Spatial orientation through sound

As demonstrated in part I, ambisonic and binaural technologies enable the spatialization of sound within a 3D environment by considering the listener's perspective and reproducing sound to the right, left, in front, or behind based on the relationship between the earphones and the sound source.

Unlike audio description, which needs time to verbally transcribe the action of a scene, sound spatialisation lets listeners immediately understand where sounds come from. It also lets them do so independently, without depending on someone to describe the scene to them.

However, while audio description captures graphic, textual, and dynamic scene elements, artistic contexts cannot be conveyed solely through earcon placement.

As a result, a number of research projects in the field of accessible video games have attempted to find a good balance between audio description and audio spatialisation in order to offer a more complete and accessible experience.

One example is ReSAC (Responsive Spatial Audio Cloud), a computational system that offers an immersive auditory experience through headphones, coupled with detailed descriptions of the on-screen action. In a first-person role-playing game, players can perceive the environment around them and guess the location of each object. They can also mentally reconstruct the environment from the audio description they hear.

Prioritizing the information given is therefore crucial to the experience of a visually impaired person: for example, it is more relevant to describe moving objects than colors or lights. The perception of color and light naturally depends on the person's degree of blindness (see Introduction), which is why it should be taken into account when choosing the elements to be described.

When relying only on touch and hearing, the brain can readily reconstruct movements and shapes, which is not the case for colors or other purely visual elements. However, people who have been blind from birth (and have therefore never perceived color) can still understand the concept of color and picture it in their minds thanks to descriptions from sighted people.
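The prioritization rule above (movement before color and light, adjusted to each player's degree of blindness) can be expressed as an ordering of the descriptions waiting to be spoken. The categories and their ranking are hypothetical and are not taken from ReSAC. So is the idea of a per-player list of categories to skip.

```python
from dataclasses import dataclass

# Hypothetical ranking: lower numbers are described first.
PRIORITY = {"moving_object": 0, "obstacle": 1, "static_object": 2, "light": 3, "color": 4}


@dataclass
class Description:
    kind: str
    text: str


def order_descriptions(pending, skipped_kinds=frozenset()):
    """Drop the kinds a player has opted out of, then sort by priority.

    Unknown kinds go last; sorted() is stable, so equal-priority items keep
    their original order.
    """
    kept = [d for d in pending if d.kind not in skipped_kinds]
    return sorted(kept, key=lambda d: PRIORITY.get(d.kind, len(PRIORITY)))


pending = [
    Description("color", "The walls are painted red."),
    Description("moving_object", "A guard walks towards you from the left."),
    Description("light", "A torch flickers ahead."),
]
for d in order_descriptions(pending):
    print(d.text)
```

Making the skipped categories a player setting, rather than a fixed rule, reflects the point above that some blind players still want color described.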

Another example of remarkable research in the field of video game accessibility is the creation of the RAD (Racing Auditory Display) by Columbia University researchers Brian A. Smith and Shree K. Nayar.

This is a sound design system that enables visually impaired people to play car games with efficiency and a sense of control.

The researchers aimed to challenge the common belief held by many game designers that enhancing accessibility requires reducing difficulty or slowing down gameplay to accommodate player reactions. With RAD, players can promptly perceive their car's speed and direction. To accomplish this, the designers incorporated various tools for players to customize their driving experience: firstly, they introduced audio sliders, i.e. sounds that vary in volume, position and pitch to indicate the trajectory and speed of the vehicle; secondly, they used a combination of sounds and voice synthesis to indicate to the player the obstacles and turns to be made.
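The audio slider maps a continuous game value onto a continuous sound parameter. It can be sketched as two mappings: the car's lateral position on the track drives stereo pan, and its speed drives the pitch of a tone. This is not the published RAD design. The track width, speed range, base pitch and one-octave range are all assumptions chosen for illustration.

```python
def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def audio_slider(lateral_offset_m: float, speed_kmh: float,
                 half_track_width_m: float = 5.0, max_speed_kmh: float = 200.0,
                 base_pitch_hz: float = 220.0) -> tuple[float, float]:
    """Map the car's state to (pan, pitch_hz).

    pan: -1 = left edge of the track, 0 = centre, +1 = right edge.
    pitch: rises by up to one octave (x2) as speed approaches max_speed_kmh.
    """
    pan = clamp(lateral_offset_m / half_track_width_m, -1.0, 1.0)
    speed_ratio = clamp(speed_kmh / max_speed_kmh, 0.0, 1.0)
    pitch_hz = base_pitch_hz * 2 ** speed_ratio
    return pan, pitch_hz


print(audio_slider(lateral_offset_m=-2.5, speed_kmh=100))  # drifting left at half speed
```

Pitch is mapped exponentially because we perceive pitch roughly logarithmically. Equal changes in speed therefore produce roughly equal perceived steps in pitch.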

Hence, as demonstrated in the test below, the combination of sound language and audio description enables every player to independently and responsively enjoy a car game.

Today, car games remain largely inaccessible to the visually impaired because driving a car is such a visual activity in everyday life. It should be noted that, at the time of writing, people with moderate or severe blindness cannot legally drive a car in real life. Many jurisdictions require a visual acuity of around 20/40 (6/12) or better to hold a driving license.

2.3 The utopia of a standardized sound language

The example of RAD shows that specific sounds can be associated with different actions, such as a car's trajectory or speed, and modulated through volume, timbre, pitch, frequency and other parameters to create intricate and distinctive effects.

This suggests the possibility of a universal sound language, applicable across all video games, to improve their accessibility. For instance, the sound of opening a chest usually resembles creaking wood with faint metallic noises, albeit with variations from game to game. I therefore raised this idea several times during my research for this study, in order to gather ideas and opinions from industry professionals.

Many acknowledged the potential benefits of standardization for video game accessibility. It was my interview with Jean-Baptiste Tremouiller from Be Player One, in particular, that gave me a concrete idea of why it would be difficult to implement: however commendable the goal of accessibility, a universal sound language could be detrimental to the artistic direction of each video game.

All video games have a precise visual and sound direction to immerse the player in a world reflecting the designers' imagination. In addition, Tremouiller asserts that audio also plays a crucial role in evoking emotions, especially through interactive music that mirrors key phases of the game adventure.

Nevertheless, game designers and sound designers often use universally recognizable sounds, such as the sound of coins when buying or selling something. Just as UX designers rely on easily identifiable visuals, sound designers are discouraged from introducing jarring new sounds for actions like making a purchase, as this could unsettle users accustomed to familiar sounds. Our brains naturally associate the sound of coins with financial transactions in any medium, be it a video game or a film.

While this idea might initially seem like a good basis for a universal sound language, the challenge is that each sound in a video game has characteristics specific to that game's world. For example, in « Red Dead Redemption 2 », the sound accompanying a purchase is not the clink of coins but the distinct sound of a revolver cylinder rotating, evoking a firearm typical of the game's Wild West universe.

While establishing standardized codes is possible, selecting a sound or visual element that universally represents an action across all video games could be viewed as a limitation on artists' creativity.

Today, audio plays a significant role in shaping a game's identity and enhancing immersion, and audio teams often conceptualize it early in the production process. Game designers carefully craft a visually and aurally stimulating experience to keep the player engaged and allow for customization.

By contrast, accessibility for the visually impaired is often treated as a layer of settings added at the end of production to improve playability. Moreover, as discussed earlier, the term « accessibility » is frequently misinterpreted as referring to a game's level of challenge rather than to access for people with impairments.

Accessibility has become more standardized in recent years, and a game typically needs to meet specific guidelines to be classified as adequately accessible, including:

Subtitles: Does the game feature subtitles, and if so, are they present in all the dialogue? Are they appropriately sized? Are they clearly visible against the background? Does the subtitle identify the speaker? What choices does the player have to adjust these components?

Visual acuity: Is the text legible at an appropriate distance? Do the UI elements contrast effectively with the background? Are there options within the game to adjust these elements?

Color blindness: Is there a distinction in key elements for individuals with the three primary types of color vision deficiency? Does the game offer a colorblind mode or options to adjust the color palette?

Controller remapping: Does the game allow players to change the buttons that perform actions? Does it provide controller layout presets to choose from? Does it allow players to change other aspects of the controller, such as the sensitivity and dead zones of the analogue stick?

We will now analyze specific video games that considered accessibility from pre-production onward while also meeting the standards above, thereby enhancing the player experience.

III - Towards a paradigm of inclusivity in the world of video games

3.1 Echolocation and the challenges of audio space

The 3D worlds and maps of video games have always been designed according to the visual perception that sighted people have of the environment.

Similarly, movement is limited to four directions in most video games, namely front, back, right and left (mostly by using the arrow keys on the keyboard or a controller).

A study conducted by University of Melbourne researchers on blind gamers revealed that this limited movement can be frustrating for them.

Blind individuals find their way around by relying heavily on touch and hearing, rather than by visualizing a 3D environment divided into four directions. Contrary to what many people assume, they do not picture an image of the environment in their head in order to get around (unless they were sighted at some point in their life). Several of them also said they regularly used echolocation to find their way around in real life.

Echolocation is the ability of certain mammals, including humans, to emit sound pulses and use their echoes to form a mental representation of their surroundings.

This ability enables blind individuals to perceive the dimensions, composition, and proximity of objects, and anticipate potential collisions with objects or moving entities.

For years, audio teams have been trying to authentically replicate soundscapes using reverberations and other sonic tools.

In a game, for instance, when our character is in a cave and a metal object drops 3 meters away, the sound effects, reverberation and echoes should convey this spatial information. Yet reproducing such an auditory landscape is complex. We have seen that binaural or ambisonic mixing (requiring headphones) can accurately position a 3D object within an auditory space. However, reproducing the precise echoes generated by an object's size and material under specific physical conditions requires extensive computation and the consideration of many variables. Consequently, locating an object in a virtual world through echolocation is not yet possible. The artificial audio environment does not give the brain enough information to discern all the essential details of an object without visual assistance.
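A rough calculation shows how fine-grained these cues are. Consider a click emitted by the listener and reflected by an object at distance d: the echo returns after a round trip of 2d / c. The sketch below assumes c = 343 m/s (air at about 20 °C) and a 48 kHz sample rate. It ignores everything else a real echo carries (frequency-dependent absorption by the material, diffraction, multiple reflections), which is precisely the information that is costly to simulate.

```python
SPEED_OF_SOUND_M_S = 343.0  # air at roughly 20 °C


def echo_delay_ms(distance_m: float) -> float:
    """Round-trip delay of an echo from an object distance_m away, in milliseconds."""
    return 2 * distance_m / SPEED_OF_SOUND_M_S * 1000


def echo_delay_samples(distance_m: float, sample_rate: int = 48_000) -> int:
    """Same delay expressed in audio samples, rounded to the nearest sample."""
    return round(2 * distance_m / SPEED_OF_SOUND_M_S * sample_rate)


for d in (0.5, 1.0, 3.0):
    print(f"{d} m -> {echo_delay_ms(d):.2f} ms ({echo_delay_samples(d)} samples)")
```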

The idea of being able to echolocate an object in a video game would not only increase realism and immersion for sighted people, it would also be a revolution for accessibility for visually impaired people.

Nevertheless, even if the technical demands and the human and technological costs are too great for this to be achieved in the near future, technological advances (particularly in the field of immersive audio) suggest that we will be able to get closer to sound realism.

Major video game developers are actively exploring innovative audio solutions to enhance accessibility, with significant strides made in recent years.

The launch of « The Last of Us II » marked a pivotal moment in the realm of accessibility, showcasing notable progress in this domain.

3.2 « The Last of Us II » and the accessibility revolution

On June 19, 2020, the renowned game developer « Naughty Dog » released its third-person adventure game « The Last of Us Part II » after 7 years of development, following the success of its predecessor released in 2013.

This installment was acclaimed by critics and players alike for its gameplay and storyline, the key features of the original game. Beyond that, the sequel made a significant impact on accessibility by introducing innovative features that have led many video game journalists and members of the blind community to call « The Last of Us Part II » the most accessible game currently available.

Notably, Steve Saylor, a blind gamer and content creator, highlighted how the game has transformed accessibility for people with various disabilities (visual, auditory or motor impairments), asserting that no other game has surpassed its accessibility standards. While some recent titles, such as « God of War Ragnarök », are beginning to draw inspiration from its advances, « The Last of Us Part II » remains unrivaled in this regard.

Steve Saylor's video (see References) offers a non-exhaustive list of the accessibility features introduced; here we will focus on those most relevant to our analysis.

Upon starting the game, the player is first greeted by the accessibility menu. At first this may seem cumbersome, whether or not one has a disability. However, the preset difficulty and impairment-specific settings quickly make the experience a pleasant one.

Noteworthy options for people with visual impairments include color-coded visual aids. For instance, activating this feature turns the entire environment gray, highlights the character in green and tints enemies in red, making the crucial elements on screen easier to see. Furthermore, the touchpad at the center of the PlayStation controller can be used to zoom into specific areas of the scene or user interface, allowing the environment to be examined in detail.

This functionality proves particularly beneficial for individuals experiencing varying degrees of blindness, ranging from stages I to III (refer to the Introduction for details on stages of blindness).

In particular, there are two audio-based features that we will examine in more detail, namely navigation assistance and « scan » (sonar).

Navigation assistance consists of using earcons to render any element that is important to the gameplay. This could be, for example, the sound of a door as you walk past it, or a beam at head level that prevents you from moving forward without crouching down. The diversity and spatial distribution of these sounds help indicate the direction to follow without relying on verbal instructions.

The sounds are distinct and sufficiently audible to indicate the required game action and guide the player in the narrative progression.
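To illustrate how the spatial distribution of earcons can indicate a direction without words, the sketch below computes the angle of an object (a door, a beam) relative to where the player is facing and turns it into left/right gains with a constant-power pan law. This is a deliberately simplified, hypothetical model: the functions, the 2D top-down coordinates and the stereo pan law are assumptions for illustration, not Naughty Dog's implementation, which the sources do not describe and which presumably relies on full 3D spatialization. Plain stereo panning cannot tell front from back, so sources behind the player are simply pinned to the nearest hard side.

```python
import math
from typing import Tuple


def relative_azimuth(player_xy: Tuple[float, float], player_yaw: float,
                     target_xy: Tuple[float, float]) -> float:
    """Angle of the target relative to the player's facing direction, in radians.

    Yaw and angles are measured counter-clockwise from the +x axis, so a positive
    result means the target is to the player's left. Wrapped to (-pi, pi].
    """
    dx = target_xy[0] - player_xy[0]
    dy = target_xy[1] - player_xy[1]
    angle = math.atan2(dy, dx) - player_yaw
    return math.atan2(math.sin(angle), math.cos(angle))


def constant_power_pan(azimuth: float) -> Tuple[float, float]:
    """Map an azimuth to (left_gain, right_gain) with left**2 + right**2 == 1."""
    # Clamp to the frontal half-plane: +pi/2 is hard left, -pi/2 is hard right.
    a = max(-math.pi / 2, min(math.pi / 2, azimuth))
    p = (a + math.pi / 2) / math.pi   # 0.0 = hard right, 1.0 = hard left
    theta = p * math.pi / 2
    return math.sin(theta), math.cos(theta)


if __name__ == "__main__":
    # Player at the origin facing +y; a door 2 m to the player's left.
    az = relative_azimuth((0.0, 0.0), math.pi / 2, (-2.0, 0.0))
    print(constant_power_pan(az))  # earcon played almost entirely in the left ear
```

The constant-power law keeps the perceived loudness of an earcon roughly stable as it moves across the stereo field, so only its position, not its volume, tells the player where to go.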

The audio team of « The Last of Us II » incorporated the concept of echolocation (refer to the previous section) to create the scan or sonar feature, i.e. the ability to press a button on the controller to activate the detection of the surroundings, and get audio feedback on all the important gameplay elements.

These spatialized sounds are repeated and intensify as the player approaches them, indicating which objects one can pick up and even the location of enemies.
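The behavior described above, pings that repeat faster and grow louder as the player approaches, can be sketched as a simple mapping from distance to repetition interval and gain. The sketch below assumes a linear mapping and invents all its tuning values (a 15 m scan radius, intervals between 0.15 s and 1 s, gains between 0.2 and 1.0); the names `ScanParams`, `ping_schedule` and `scan` are hypothetical and the game's actual curves are not documented in our sources.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ScanParams:
    # All values are hypothetical tuning parameters, not the game's real settings.
    radius_m: float = 15.0        # items farther than this are not announced
    min_interval_s: float = 0.15  # fastest repetition, item right next to the player
    max_interval_s: float = 1.0   # slowest repetition, at the edge of the radius
    min_gain: float = 0.2         # quietest ping, at the edge of the radius
    max_gain: float = 1.0         # loudest ping, right next to the player


def ping_schedule(distance_m: float,
                  p: ScanParams = ScanParams()) -> Optional[Tuple[float, float]]:
    """Return (repeat interval in seconds, linear gain) for one item, or None if out of range."""
    if distance_m > p.radius_m:
        return None
    closeness = 1.0 - max(distance_m, 0.0) / p.radius_m  # 0 at the edge, 1 at the player
    interval = p.max_interval_s - closeness * (p.max_interval_s - p.min_interval_s)
    gain = p.min_gain + closeness * (p.max_gain - p.min_gain)
    return interval, gain


def scan(player_xy: Tuple[float, float],
         items: Dict[str, Tuple[float, float]],
         p: ScanParams = ScanParams()) -> List[Tuple[str, float, float]]:
    """One press of the scan button: every item in range, nearest first."""
    hits = []
    for name, (x, y) in items.items():
        d = math.hypot(x - player_xy[0], y - player_xy[1])
        schedule = ping_schedule(d, p)
        if schedule is not None:
            hits.append((d, name, schedule[0], schedule[1]))
    hits.sort()
    return [(name, interval, gain) for _, name, interval, gain in hits]
```

For example, `scan((0.0, 0.0), {"ammo": (3.0, 4.0), "enemy": (1.0, 0.0)})` lists the enemy first, with a shorter interval and a higher gain than the ammunition 5 m away. A linear curve is only the simplest choice; a designer might prefer a steeper curve near the player so that the final approach is easier to judge.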

It is interesting to note that the game's main enemies, known as « clickers », are themselves blind and rely on echolocation to locate and eliminate the player.

They regularly emit clicking sounds to find their way and react to the noise the player makes: if the player is too loud, the clickers locate them and attack. Consequently, stealth and infiltration tactics play a central role in encounters with these enemies.

During our interview, Jean-Baptiste Tremouiller highlighted that the directors and game designers of « The Last of Us II » devoted significant effort to accessibility. This preparatory work likely took place before production began, so as to ensure a cohesive and meaningful experience tailored to each player's preferences. He further emphasized that these adaptations of the user experience do not make the game any less interesting for the person playing it.

One might assume that these features make the game easier, yet the difficulty remains well balanced even with the accessibility options activated. Naughty Dog has thus moved beyond the easy/difficult dichotomy and designed a game that adapts to each player's abilities and preferences.

To achieve this, the studio had to address these issues during pre-production: simply lowering the difficulty would certainly have been a shortcut to satisfying part of the audience, but it would have been no more than a band-aid applied to the problem of accessibility.

3.3 The transition from accessibility to inclusivity

Historically, a clear distinction has often been drawn between video games designed for sighted players and those created for blind players.

Audio games, for instance, primarily target blind players, although anyone can play them, whereas most video games on the market are aimed mainly at sighted players, despite improvements in accessibility.

In recent years, numerous researchers have argued that there should be no such distinction in target audience, and that every video game should take into account all potential cognitive and physical impairments.

Notably, in 2021, a team of Japanese researchers led by Masaki Matsuo introduced a term that is increasingly prevalent in the community: « inclusivity ».

According to Mr. Matsuo's team, inclusivity rests on the principle that a game should be equally enjoyable for sighted and blind players.

This means no longer sacrificing visuals to produce audio-only games, and instead enabling blind players to engage with video game experiences that were previously too complex for them. Consequently, accessibility must be built into the design from the pre-production phase onward in order to craft an experience accessible to all.

Mr. Matsuo's team specifically mentions « multisensoriality » as a potential method to bridge the accessibility divide and foster more inclusive experiences.

By expanding the current range of gameplay mechanics, particularly through additional audio and tactile (haptic) mechanics, new adaptable and inclusive experiences could be created, rather than merely lowering the level of challenge.

A number of projects have been set up to raise awareness of these issues among the younger generation.

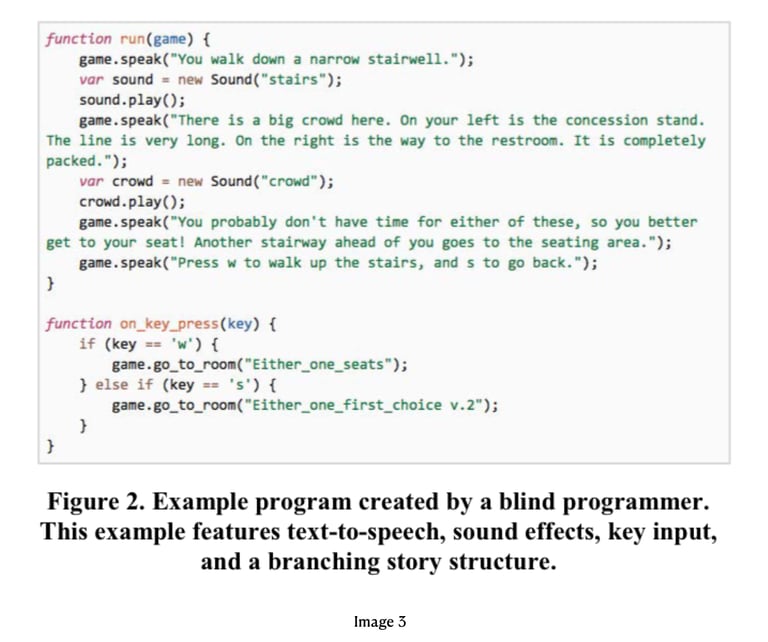

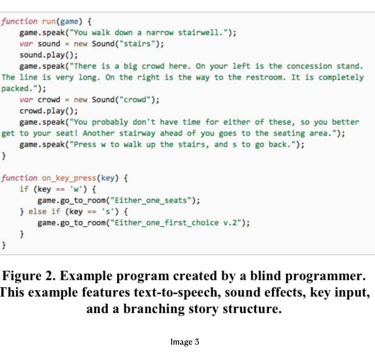

For example, the « Bonk » project, designed by a research group at the University of Colorado, enables blind people to learn to write code easily and to add the following functions to their own games: scenarios with branching choices, voice reading (text-to-speech), sound effects and keyboard input (see image 3).

This tool plays a crucial role in educating the visually impaired community and strengthening its technical skills. In turn, it equips its members to advocate for the changes the industry needs to make video games intuitive and playable.

In particular, advances in sound field management, as demonstrated in audio games, are pivotal in fostering the independence of blind players across audiovisual media.

The idea behind this research project is therefore to give accessibility researchers the tools to better understand the needs of visually impaired people, and to give visually impaired people the opportunity to design their own video game experiences.

Moreover, since participants have an extensive sound library at their disposal, researchers can analyze their sound preferences and associations.

Inclusivity also introduces the idea of « empowerment », enabling visually impaired individuals to express their desired experiences.

« Bonk » has the potential to amplify the voices of people with visual impairments and to offer a fresh perspective on accessibility challenges: it is a tool that can provide valuable insights into building a more accessible and fair future for all.
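To make the idea of a scenario with branching choices concrete, the sketch below represents a tiny audio game as a set of scenes, each with a line to be read aloud, an optional sound effect and a mapping from key presses to the next scene. It is written in Python purely for illustration: it is not Bonk's language, syntax or API, and the names `Scene`, `STORY`, `play` and the sound file names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Scene:
    text: str                      # line a text-to-speech voice would read aloud
    sound: Optional[str] = None    # hypothetical sound-effect file played on entering the scene
    choices: Dict[str, str] = field(default_factory=dict)  # key pressed -> id of the next scene


# A tiny hypothetical scenario with one branching choice.
STORY: Dict[str, Scene] = {
    "start": Scene("Water flows to your left and footsteps approach from behind. "
                   "Press L to follow the water or B to turn back.",
                   sound="river.wav", choices={"l": "river", "b": "guard"}),
    "river": Scene("You follow the river and escape. The end."),
    "guard": Scene("A guard blocks your path. The end.", sound="sword.wav"),
}


def play(story: Dict[str, Scene], keys: List[str], start: str = "start") -> List[str]:
    """Walk the scenario with scripted key presses and return the ids of the scenes visited.

    A real game would speak scene.text and play scene.sound at each step; this sketch only
    records the path so that the branching logic can be checked.
    """
    scene_id = start
    visited = [scene_id]
    for key in keys:
        next_id = story[scene_id].choices.get(key.lower())
        if next_id is None:
            continue  # ignore keys that are not a valid choice in this scene
        scene_id = next_id
        visited.append(scene_id)
    return visited
```

Keeping the story as plain data, separate from the code that reads it aloud, is one way a beginner can add scenes and choices without touching the playback logic.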

Conclusion

We first showed that audio games serve as a direct solution for visually impaired individuals, as they rely solely on auditory cues rather than visual elements.

« A Blind Legend » was developed with this principle in mind, aiming to increase awareness of vision impairments among those with sight.

While this game offers an educational experience, it may not be as comprehensive as other games available. Its focus on storytelling, its use of binaural technology and its incorporation of human voices (rather than synthesized ones) distinguish it from traditional video games in terms of accessibility. Nonetheless, the absence of visual components accentuates the divide between audiences, with audio games categorized primarily for the visually impaired and other games for sighted players.

Nevertheless, there is a noticeable endeavor by video game studios to enhance the accessibility of games to a wider audience. Incorporating standards like audio descriptions, subtitles, and voice or sound navigation contributes to a more inclusive and enduring gaming experience for the visually impaired community. However, these elements are frequently integrated late in the development process and may not consistently align with the artistic vision of the game. While such efforts are common in theatrical productions, they are seldom prioritized in the video game sector.

We also analyzed the sonar option designed by Naughty Dog for « The Last of Us II », which serves as an alternative to the voice navigation offered by other games such as « A Blind Legend ». While navigating a virtual environment through echolocation remains unattainable, the system devised by the Californian studio marks progress in this area and brings a fresh perspective on audio spatial awareness.

This inclusive design approach has enabled diverse functionalities, accommodating various impairments.

Various research projects by studios and universities have also led to the creation of a new paradigm in which accessibility is not seen as a way of facilitating existing gameplay, but as a means of designing a different, balanced experience that is still in keeping with the designers' artistic vision.

Projects such as Bonk, ReSAC and RAD, analyzed earlier, aim to make visually impaired gamers independent through accomplished sound design.

It is noteworthy that the majority of references and projects cited in the bibliography of this study were published post-2018.

This indicates that accessibility research in video games has recently emerged as a focal point of interest in scientific inquiry.

This field is still in its nascent stages but is rapidly evolving and poised for further advancement in the future.

Throughout the drafting of this analysis, new articles were published regularly, underscoring the growing interest in this matter both within and beyond the video game world.

For years, accessibility was seen as superfluous, and as requiring too high a budget to be taken into account by all game developers.

Because accessibility for the visually impaired is intrinsically based on audio design, which itself often comes late in production, accessibility options were developed hastily and superficially.

The design of accessibility options was often seen as adding complexity to the production of a video game, which already required a great deal of organizational work. There are often budgetary and cost/benefit discussions that could slow down the development of such features.

Nevertheless, the journey from audio games to the discussion of inclusivity in video games has shown that sophisticated audio design can advance this field by creating immersive sound environments, incorporating captivating sound effects and voice guidance, and seamlessly blending non-synthetic audio descriptions into the game world.

It should be stressed that the purpose of this study is not to offer a solution or a definitive answer to the problem: as we have seen, such in-depth sound design requires considerable human and technological effort, and many issues of artistic identity and gameplay would hinder this work.

The real aim of this study is to create a debate and open up the possibilities for new ideas or research efforts that could contribute to improvements in the field of accessibility for visually-impaired people in the future.

In conclusion, current research on video game accessibility and the development of a more immersive and accurate sound environment for interactions appear as highly promising factors in ensuring that the visually impaired community feels fully integrated into the gaming community.

References

Videogames accessibility

Anderson, S. L. R., & Schrier, K. (2022). Disability and Video Games Journalism: A Discourse Analysis of Accessibility and Gaming Culture. Games and Culture, 17(2), 179–197. https://doi.org/10.1177/15554120211021005

Andrade, R., Rogerson, M. J., Waycott, J., Baker, S., & Vetere, F. (2019, May 2). Playing blind: Revealing the world of gamers with visual impairment. Conference on Human Factors in Computing Systems - Proceedings. https://doi.org/10.1145/3290605.3300346

Andrade, R., Rogerson, M. J., Waycott, J., Baker, S., & Vetere, F. (2020, April 21). Introducing the Gamer Information-Control Framework: Enabling Access to Digital Games for People with Visual Impairment. Conference on Human Factors in Computing Systems - Proceedings. https://doi.org/10.1145/3313831.3376211

Brown, M., & Anderson, S. L. R. (2021). Designing for Disability: Evaluating the State of Accessibility Design in Video Games. Games and Culture, 16(6), 702–718. https://doi.org/10.1177/1555412020971500

Drossos, K., Zormpas, N., Floros, A., & Giannakopoulos, G. (2015, July 1). Accessible games for blind children, empowered by binaural sound. 8th ACM International Conference on PErvasive Technologies Related to Assistive Environments, PETRA 2015 - Proceedings. https://doi.org/10.1145/2769493.2769546

Hersh, M., & Leporini, B. (n.d.). Serious Games, Education and Inclusion for Disabled People Editorial Introduction: The Need for Serious Games for Disabled People. http://www.whitestick.co.uk/ongames.html

Kane, S. K., Koushik, V., & Muehlbradt, A. (2018). Bonk: Accessible programming for accessible audio games. IDC 2018 - Proceedings of the 2018 ACM Conference on Interaction Design and Children, 132–142. https://doi.org/10.1145/3202185.3202754

Khaliq, I., & Torre, I. dela. (2019). A study on accessibility in games for the visually impaired. ACM International Conference Proceeding Series, 142–148. https://doi.org/10.1145/3342428.3342682

Kulik, J., Beeston, J., & Cairns, P. (2021, August 3). Grounded Theory of Accessible Game Development. ACM International Conference Proceeding Series. https://doi.org/10.1145/3472538.3472567

Matsuo, M., Miura, T., Yabu, K. I., Katagiri, A., Sakajiri, M., Onishi, J., Kurata, T., & Ifukube, T. (2021). Inclusive Action Game Presenting Real-time Multimodal Presentations for Sighted and Blind Persons. ICMI 2021 - Proceedings of the 2021 International Conference on Multimodal Interaction, 62–70. https://doi.org/10.1145/3462244.3479912

Smith, B. A., & Nayar, S. K. (2018). The RAD: Making racing games equivalently accessible to people who are blind. Conference on Human Factors in Computing Systems - Proceedings, 2018-April. https://doi.org/10.1145/3173574.3174090

Swaminathan, M., Pareddy, S., Sawant, T., & Agarwal, S. (2018). Video gaming for the vision impaired. ASSETS 2018 - Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility, 465–467. https://doi.org/10.1145/3234695.3241025

Swarts Jessica, « A Look at Audio Games, Video Games for the blind », Text-based and interactive Fiction, https://www.inverse.com/article/13331-a-look-at-audio-games-video-games-for-the-blind, uploaded on 25/03/16, accessed on 15/07/22

Wilds Stephen, « The Last of Us 2 goes beyond accessibility and difficulty levels », https://www.polygon.com/2020/7/2/21310396/last-of-us-2-accessibility-vision-difficulty-gameplay-opinions, uploaded on 02/07/2020, accessed on 21/04/2023

Theatre accessibility

Ferziger, N., Dror, Y. F., Gruber, L., Nahari, S., Goren, N., Neustadt-Noy, N., Katz, N., & Erez, A. B. H. (2020). Audio description in the theater: Assessment of satisfaction and quality of the experience among individuals with visual impairment. British Journal of Visual Impairment, 38(3), 299–311. https://doi.org/10.1177/0264619620912792

Franzen, R. (2019). One ear on the stage and one in the audience?–Audio description and listening to theatre as dramaturgical exercise. South African Theatre Journal, 32(1), 48–62. https://doi.org/10.1080/10137548.2019.1614975

Vander Wilt, D., & Farbood, M. M. (2021). A new approach to creating and deploying audio description for live theater. Personal and Ubiquitous Computing, 25(4), 771–781. https://doi.org/10.1007/s00779-020-01406-2

Whitfield, M., & Fels, D. I. (2013). Inclusive design, audio description and diversity of theatre experiences. Design Journal, 16(2), 219–238. https://doi.org/10.2752/175630613X13584367984983

Blindness

Cattaneo, Z., and Vecchi, T. (2011) « Blind Vision: The Neuroscience of Visual Impairment », Cambridge, MA: MIT Press.

NVISION, « Driving With Visual Impairments: Statistics & Facts », https://www.nvisioncenters.com/education/driving-with-visual-impairments/, uploaded on 28th November 2022, accessed on 28th April 2023

OMS (World Health Organization), fact sheet on blindness and visual impairment, https://www.who.int/fr/news-room/fact-sheets/detail/blindness-and-visual-impairment, uploaded on 13th October 2022, accessed on 26th April 2023

Young Emma, « How do blind people who’ve never seen color, think about color? », The British Psychological Society, https://www.bps.org.uk/research-digest/how-do-blind-people-whove-never-seen-color-think-about-color, uploaded on 28th March 2019, accessed on 19th February 2023

Game Audio

Sinclair Jean-Luc, Principles of Game Audio and Sound Design, Routledge, 2020

Waves, « 3D Audio on Headphones: How Does It Work? », https://www.waves.com/3d-audio-on-headphones-how-does-it-work , uploaded on 04/02/2021, accessed on 03/08/2022

Wikipédia, Earcon, https://fr.wikipedia.org/wiki/Earcon, last modified 12/08/20, accessed on 15/07/22

Videos

« Assassin's Creed Valhalla: [Audio Description] Cinematic World Premiere Trailer | Ubisoft [NA] », https://www.youtube.com/watch?v=WWDxCQwqMao, uploaded on 30/04/20, accessed on 15/08/22

« Racing Auditory Display - for Blind Gamers », Columbia University, https://vimeo.com/256104155, uploaded on 16th February 2018, accessed on 30th April 2023

« Red Dead Redemption 2 | Buying and Customizing Weapons at the Gunsmith » by Indo-J, https://youtu.be/JBBNe9IuCk0?t=174, uploaded on 25th October 2018, accessed on 18th April 2023

« The Last Of Us Part II - MOST ACCESSIBLE GAME EVER! - Accessibility Impressions » by Steve Saylor, https://www.youtube.com/watch?v=PWJhxsZb81U, uploaded on 12/06/2020, accessed on 21/04/2023


Appendices

Interview with Jérôme Cattenot, Production Director at ‘Dowino’, on the design of the game ‘A Blind Legend’ Interview conducted on the ‘Google Hangouts’ platform on 18th March 2022

Transcription by the author

1. Did you have any problems with the translation? I noticed that the game is only in French and English. Was it a question of budget?

It's a question of budget, because the game is free. If we had made money from it, we would have added other languages. And we've been asked to do that many times: in the app stores, a recurring comment was ‘Spanish, please’. So we only did the film in English and French, and we didn't have any translation problems because we were there to direct the actors, even in English.

2. Do you think that using a synthetic voice would have solved the translation problem while maintaining the same level of experience?

It would have solved the translation problem but it would have broken the whole experience. There was already a lot of debate about the synthetic voice, which was used to give directions and therefore had no character. Some people found it really problematic. They thought it sounded like a GPS voice. So, if we'd had that for all the characters, it would never have worked because the characters had to be believable to give the game realism. The game takes place in the Middle Ages, so it's a very realistic atmosphere but not at all magical like ‘Lord of the Rings’. All the sounds had to be realistic: the mix and the voices. So if you use computer-generated voices, it doesn't work at all. It might work for making robots but not at all for characters for an adventure in the Middle Ages.

3. Given the progress of technology, do you think that a more ‘human’ synthesized voice could improve the experience and accessibility for the visually impaired?

No, not really. Blind people have no problem with synthesized voices; they're used to hearing them all the time, so they don't mind being given directions by a synthesized voice. Now the question is: ‘Would games be more accessible if there were more voices?’ On the one hand, yes, because, for example, there are text-based games where you are the hero, a bit like in a book. There's a lot of text and the characters speak according to the text. But imagine using computer-generated voices to make these games accessible. It wouldn't be very relevant because there are already voices.

4. For the design of this game, did you use a 2D/3D graphical map and place the sounds in space, or did you create a sound map directly?

The game was actually coded in Unity, so the sounds are placed on a map. They are placed spatially, and the player moves around among them. The sounds aren't themselves binaural, but they're in a 3D environment, so obviously if I turn my character around, I have the impression that I'm really turning: what I heard on the left, I'll hear behind me; what I heard on the right, if I turn around, I'll hear on the left, and so on.

5. Did you record sounds in Ambisonics or were stereo or mono sounds spatialized?

We made very few recordings in Ambisonics and that was mainly for sounds that weren't spatialized like the wind or the sound of leaves. In the first prototype we spatialized these sounds a lot, but we had blind people test them and they said it didn't work because it didn't resemble reality. There's no such thing as the wind on the left. Wind is everywhere, in the sense that it moves everything around us. In any case, most of the sounds were certainly in mono so that they could be spatialized.

6. How did you find the right balance between ambient sounds and interaction sounds? Was this established at the design stage, or did you go through several iterations and trials?

When we were writing, we were already alternating between three types of phase: there are cinematic phases, often with dialogue, where you don't have to do anything, you just have to listen; movement phases, where you have to move forward in the place where you are; and combat phases, where you don't move any more, but you have to strike at the right moment depending on what's attacking you.

So, as soon as we started writing, we started thinking about the alternation of all that, and then it was a matter of level design. It was while we were creating these maps, putting the sounds in space, that we also tested the length of the locations: how long would it take me to get through this place? Where do we put the fights? The game alternated between exploration and combat, and we also had to understand the rhythm. For example, the more difficult a fight is, the longer it takes. So it built up little by little because even though we conceptualized the phases when we were writing, sometimes we realized when we tested them that they were too long. For example, there were moments when you were going through a forest and it wasn't very interesting, so we redesigned it to introduce more interaction.

7. Have you ever had problems with sound overload? For example, during the tests did you realize that there was too much audio information?

We encountered the opposite problem. Because of technical constraints we had to use very little sound. So we asked ourselves how we could bring a medieval village to life with a limited amount of sound. So we used the sounds of bells, chickens, pigs, passing carts and children running. After that, it's all about mixing and distance. In other words, you have to separate the sounds according to the route you want to take. To sum up, we didn't have any problems with overload, but we did have a huge amount of mixing to do, almost 100 days of mixing.

8. It's very interesting that you're taking a minimalist approach to sound because, graphically, it's often the opposite: there's too much information on the screen.

Indeed, we had a twofold challenge: realism and the user experience. Initially it was a game for the blind, but we quickly realized that it shouldn't be just a game for the blind. We had to make a game they could play, of course, but also one that was interesting for non-blind people so they could see what it's like to be blind, to have only sound. All that changed the game because blind people are very good at finding their way around with sound, while sighted people are very bad at it. So we had a bit of a challenge in terms of getting people to find their way in space with very little sound. For example, in a forest, you put in a little waterfall, a little bird singing and a bee and it's easy to find your way around. But if you add other sounds, it becomes complicated to find your bearings. You can still have intermittent sounds like a bell ringing every 30 seconds. But it would still be perceived as coming from a distance, so it would have less effect on spatialisation.

9. Concerning ‘A Blind Legend’ on mobile, so not on Windows: did you find any constraints regarding gestures?

That's a very good question! It was a big challenge. We knew it had to be simple. The movement part was simple: left, right, up, down. Even the sword strokes with the diagonal thumb movements, we found very satisfying. We had to do a lot of thinking and testing with blind people before we came up with the three-finger up and down movements.

10. I've noticed that you've used a lot of internal objective sounds like breath and heartbeat. Was this designed to replace graphical feedback like the life bar? Or was it really geared towards the experience?

Blake's voice is mixed in a different way to feel like it's us talking. In fact, all the breathing and heartbeats were to indicate life and that's better than a voice telling you ‘you're going to die soon’ because it keeps you immersed in the experience.